Comprehensive independent benchmark comparing leading ML frameworks for quantitative finance and specialized machine learning applications.

Popular machine learning frameworks such as JAX, TensorFlow, and PyTorch have made significant strides in ML applications whose computation graphs are small but built from large nodes. Quantitative finance models are fundamentally different: their graphs contain many nodes, each performing a small scalar computation.

MatLogica AADC is a pioneering framework initially designed for quantitative finance workloads that also excels at certain machine learning use cases. AADC uses advanced graph compilation techniques and supports automatic differentiation (backpropagation), delivering the 40-64x speedups over JAX reported below, with PyTorch and TensorFlow slower still.

Why quantitative finance needs different optimization strategies

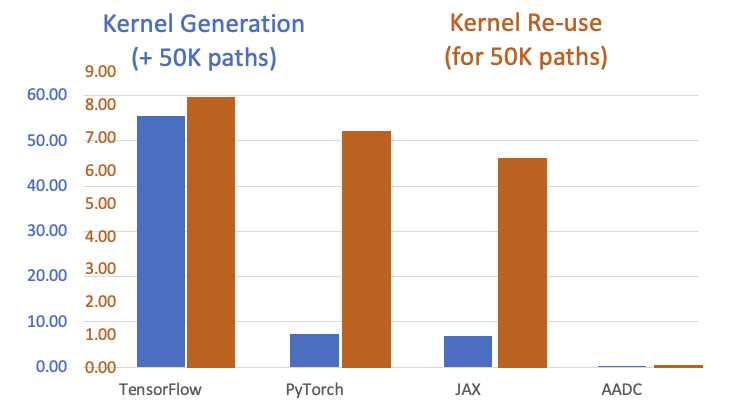

Both the compilation time of the valuation graph and the performance of the resulting code (kernel) are integral parts of the execution process. Graph compilation time is often neglected in academic benchmarks, so approaches that look promising in tests can prove unviable for production use in real-world derivatives pricing.
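To make the trade-off concrete, here is a minimal sketch of how compilation time and kernel time combine into total cost. The function names and all timings are hypothetical and chosen only for illustration; they are not measurements of any framework.

```python
def total_time(compile_s, kernel_s, n_runs):
    """Wall-clock cost of compiling a graph once and running its kernel n_runs times."""
    return compile_s + kernel_s * n_runs


def break_even_runs(slow_compile, fast_compile, max_runs=1_000_000):
    """Smallest run count at which the slow-compiling option becomes cheaper overall.

    Each option is a (compile_seconds, kernel_seconds) pair.
    Returns None if that never happens within max_runs.
    """
    for n in range(1, max_runs + 1):
        if total_time(*slow_compile, n) < total_time(*fast_compile, n):
            return n
    return None


# Hypothetical timings: option A compiles slowly but has the faster kernel,
# option B compiles quickly but has the slower kernel.
option_a = (8.0, 0.25)
option_b = (0.5, 0.5)
runs_needed = break_even_runs(option_a, option_b)
```

With these made-up numbers, the slow-compiling option only pays off after dozens of runs. If the portfolio changes before then and the graph must be recompiled, its kernel speed never compensates for the compilation cost.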

Rigorous testing on realistic quantitative finance workloads

This test case represents typical quantitative finance workloads requiring both speed and accuracy for derivatives pricing. When graph compilation time is included (critical for real-world scenarios where portfolios change), MatLogica AADC completes the run in 0.166 seconds, against 6.82 seconds for JAX.
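The benchmark model itself is not described here, so the following is only an illustration of the general shape of such a workload: a Monte Carlo pricer in which every path takes many small scalar steps. It assumes a European call under geometric Brownian motion, and the function name and parameters are hypothetical rather than taken from the benchmark.

```python
import math
import random


def mc_european_call(s0, strike, rate, vol, maturity, n_paths, n_steps, seed=0):
    """Monte Carlo price of a European call under geometric Brownian motion."""
    rng = random.Random(seed)
    dt = maturity / n_steps
    drift = (rate - 0.5 * vol * vol) * dt   # per-step drift of the log price
    diffusion = vol * math.sqrt(dt)         # per-step volatility of the log price
    payoff_sum = 0.0
    for _path in range(n_paths):
        log_s = math.log(s0)
        for _step in range(n_steps):
            # Each step is one tiny scalar update; a traced graph of this loop
            # has many small nodes rather than a few large tensor operations.
            log_s += drift + diffusion * rng.gauss(0.0, 1.0)
        payoff_sum += max(math.exp(log_s) - strike, 0.0)
    return math.exp(-rate * maturity) * payoff_sum / n_paths


# Sizes are kept small so that pure Python finishes quickly; the benchmark
# uses 50,000 paths and 500 time steps.
price = mc_european_call(100.0, 100.0, 0.05, 0.2, 1.0, n_paths=5_000, n_steps=20)
```

For these inputs the estimate should land near the Black-Scholes value of about 10.45, up to Monte Carlo noise.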

Performance comparison across all frameworks

| Framework | Time | Comparison |
|---|---|---|
| MatLogica AADC | 0.166 seconds | Baseline (Fastest) |
| JAX | 6.82 seconds | 43x slower |
| PyTorch | >6.82 seconds | Significantly slower |
| TensorFlow | >6.82 seconds | Significantly slower |

All measurements are for 50,000 Monte Carlo paths with 500 time steps.

Figure 1: AADC Performance vs ML Frameworks

In quantitative finance applications, tasks such as derivatives pricing, live risk management, stress testing, VaR, and XVA calculations allow the compiled graph to be reused, because the calculations stay the same and only the input parameters change.

When reusing kernels, MatLogica AADC outperforms JAX by 64x. This is particularly beneficial for financial simulations with many nodes performing smaller scalar computations.
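The record-once, run-many pattern behind kernel reuse can be sketched in a few lines. The `Graph` class below is a hypothetical toy, not AADC's API: it stores a list of scalar operations and interprets that list for each new set of inputs, whereas a real graph compiler would emit optimized machine code.

```python
import math
import operator

_FUNCS = {"add": operator.add, "mul": operator.mul, "exp": math.exp}


class Graph:
    """Toy recorder: stores each scalar operation once and replays it for any inputs."""

    def __init__(self, n_inputs):
        self.n_inputs = n_inputs
        self.nodes = []  # list of (operation name, operand slot indices)

    def _emit(self, op, *args):
        self.nodes.append((op, args))
        return self.n_inputs + len(self.nodes) - 1  # slot index of the result

    def add(self, a, b):
        return self._emit("add", a, b)

    def mul(self, a, b):
        return self._emit("mul", a, b)

    def exp(self, a):
        return self._emit("exp", a)

    def run(self, inputs):
        """Evaluate the recorded operations for one set of input values."""
        if len(inputs) != self.n_inputs:
            raise ValueError("wrong number of inputs")
        slots = list(inputs)
        for op, args in self.nodes:
            slots.append(_FUNCS[op](*(slots[i] for i in args)))
        return slots[-1]


# Record f(spot, rate, time) = spot * exp(rate * time) once.
# Input slots: 0 = spot, 1 = rate, 2 = time.
g = Graph(3)
growth = g.exp(g.mul(1, 2))
g.mul(0, growth)

# Reuse the same recording for different market inputs without re-recording.
base_case = g.run([100.0, 0.05, 1.0])
zero_rate = g.run([100.0, 0.0, 1.0])
```

Recording happens once, and every later call to `run` pays only for evaluation. This is the situation in which the cost of building the graph is amortized across many valuations.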

Quantitative finance and specialized machine learning

MatLogica AADC's superiority for specialized ML is demonstrated in academic papers by Prof. Roland Olsson, showing how automatic programming can synthesize novel recurrent neurons designed for specific tasks:

- Novel recurrent neurons for time series
- Event prediction and monitoring
- Machine learning for manufacturing

AADC enables evaluation of approximately 10 million candidate neurons per dataset, allowing researchers to automatically develop new neuron architectures that deliver higher accuracy than Transformers or LSTMs. Screening at this speed cannot be achieved using JAX or PyTorch.

Technical advantages for quantitative finance workloads

- Fast graph compilation, critical for changing portfolios and real-time pricing scenarios where recompilation is frequent
- Full hardware optimization for modern CPUs with advanced vector extensions
- Safe parallel execution for maximum performance on multi-core systems
- Support for well-known NumPy ufuncs for native Python integration
- Compilation of computation graphs written in a mix of C++ and Python
- Full backpropagation support for gradient calculations
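As an illustration of the last point, the sketch below shows reverse-mode automatic differentiation (backpropagation) on a small scalar graph. It is a generic textbook construction with hypothetical names (`Var`, `backward`), not a description of how AADC implements it.

```python
import math


class Var:
    """Scalar value that remembers how it was computed."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # (parent Var, local derivative) pairs
        self.grad = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __rmul__ = __mul__


def _lift(x):
    """Wrap plain numbers so they can take part in the graph."""
    return x if isinstance(x, Var) else Var(float(x))


def exp(x):
    value = math.exp(x.value)
    return Var(value, ((x, value),))  # d/dx exp(x) = exp(x)


def backward(output):
    """Propagate d(output)/d(node) from the output back to every input."""
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)  # post-order: inputs first, output last

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # output first, so each grad is complete when used
        for parent, local in node.parents:
            parent.grad += node.grad * local


# f(spot, rate, time) = spot * exp(rate * time)
spot, rate, time_to_maturity = Var(100.0), Var(0.05), Var(2.0)
f = spot * exp(rate * time_to_maturity)
backward(f)
```

A single backward pass fills in the sensitivities to all three inputs at once (`spot.grad`, `rate.grad`, `time_to_maturity.grad`). This is why the technique is attractive for risk calculations with many inputs.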

The future of quantitative computing

As the demand for fast computation grows in quantitative finance and specialized ML applications, AADC provides a solution that outperforms popular best-in-class ML frameworks by 40-64x in these benchmarks, without requiring users to learn a new programming language or extensively refactor their code.

The complete benchmark report with detailed methodology, additional test cases, and full source code is available for download.

See how AADC can transform your quantitative finance or machine learning workloads with dramatic performance improvements over JAX, PyTorch, and TensorFlow.

Source code available on request for independent verification